SupTech starts with data: Building strong and flexible data foundations

by Ayushi Misra, Debarshi Chakraborty, Kenneth Rudy Gomes and Duenna Dsouza

Nov 6, 2025 | 5 min read

This first blog in our new two-part series argues that effective SupTech begins with robust data systems. Drawing on research by MSC and the Cambridge SupTech Lab, it shows how poor data quality, weak standardization, and limited infrastructure undermine supervision, and it urges regulators to strengthen their data foundations before they adopt advanced SupTech tools.

“Regulator blames lack of timely data reporting for recent multi-million-dollar losses in the banking sector.”

This stark headline on HSBC’s GBP 57.4 million fine reveals an unpalatable reality: even the world’s major banks, equipped with advanced systems, can suffer costly outcomes because of gaps in data accuracy and reporting. HSBC’s failure to correctly identify customer deposits eligible for protection exposed significant weaknesses in its data handling, which led to a heavy regulatory penalty and hurt customer trust.

Such challenges multiply in developing economies, where data infrastructure is weaker and supervisory technologies are less mature. There, the risks compound and make financial stability even harder to safeguard.

This is precisely where supervisory technology, or SupTech, can make a real difference. It uses data analytics to turn large volumes of regulatory data into clear, actionable insights that help regulators and firms spot threats and manipulation faster than traditional, manual methods allow.

A leading example is the Monetary Authority of Singapore (MAS), which developed “Apollo,” an AI-powered system that analyzes vast volumes of trading data to detect subtle patterns of market manipulation that humans often miss. Apollo learns from expert investigators, which allows regulators to focus on the riskiest cases and illustrates how AI-driven SupTech is reshaping market supervision. However, even the most advanced solutions depend fundamentally on high-quality data. Without timely, consistent, and complete data, these tools cannot yield reliable insights.

For regulators, especially in emerging markets, strong and adaptable data systems are not optional. They are the foundation of effective, future-ready financial supervision that can help prevent crises and protect markets.

Global adoption: Steady progress but uneven readiness

While SupTech adoption is increasing worldwide, progress remains uneven. MSC’s work with regulators across Asia-Pacific and Africa shows that interest is widespread but readiness varies. The Cambridge SupTech Lab’s 2024 survey shows that 75% of advanced-economy regulators and 58% of emerging-market authorities now use one or more SupTech or RegTech tools, a gap that narrowed from 25 percentage points in 2023 to 17 percentage points in 2024. But behind these encouraging numbers lies a deeper challenge: the unequal readiness of the data systems that underpin SupTech initiatives.

MSC’s country-based studies reveal that many lower-income nations are still in a transitional stage of digital supervision, where some automation exists but manual processes continue to dominate. In the Pacific region, for example, several central banks have launched pilot dashboards for data analysis, but much of the input still comes from manual submissions and non-standard templates. This limits both scalability and consistency.

Our diagnostics also show that weak digital infrastructure, inconsistent data definitions, and unclear data governance frameworks are often bigger obstacles than funding. In short, adopting SupTech tools is not the same as being ready for them. In this context, “ready” means having robust data foundations: clear, consistent, high-quality, and well-governed data. Many regulators may have access to these tools but lack the data foundations needed to use them effectively or scale them sustainably.

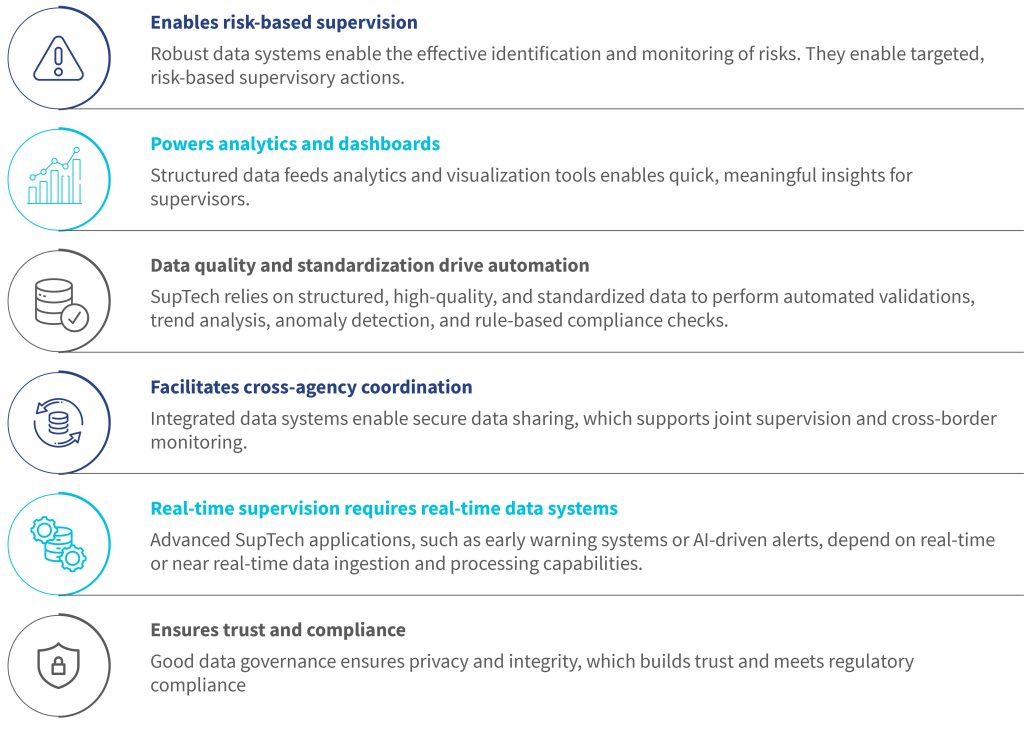

Robust and integrated data systems are the foundation on which effective SupTech supervision is built, powering automation, real-time oversight, and risk-based action.

Why strong data systems matter

SupTech may look like a technology project, but its true power depends on data, and that is where the foundation for effective supervision is built. Strong data systems sit at the heart of every effective supervisory function. They process high-frequency, granular information automatically, flag risks as they emerge, and give supervisors the confidence to act on hard evidence. They ensure that every regulated entity reports consistently and comparably, and that sensitive information is stored securely while remaining accessible to those who need it.

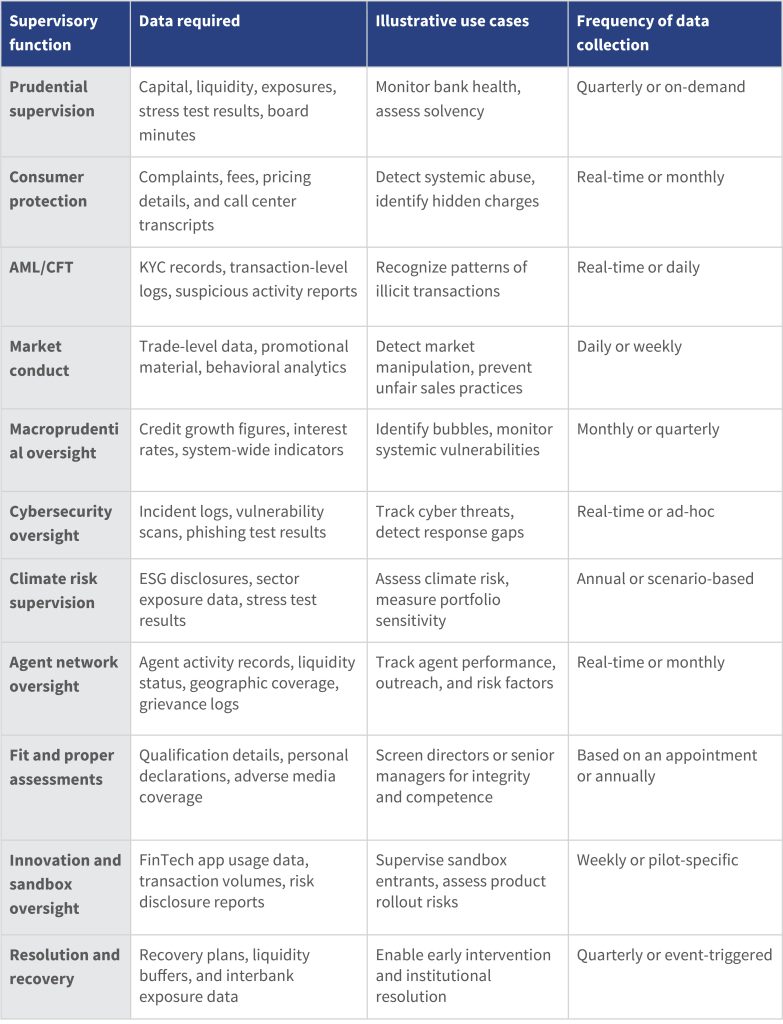

Table 1: Varying data requirements of supervisory functions

Core challenge: Weak data foundations

Across less digitally mature markets, MSC’s data maturity assessments have identified three interlinked gaps that frequently delay SupTech transformation. These gaps overlap with the global patterns described in the Cambridge SupTech Lab’s work, but our regional evidence offers sharper insight into their practical consequences. Figures attributed to MSC are derived from direct surveys of Pacific Island regulators conducted in 2023 as part of our data maturity assessment work; Cambridge figures are cited for global context.

Data quality gaps

Manual and spreadsheet-based reporting still dominates. MSC’s Pacific SupTech readiness survey (2025) found that one-third of regulators rely on manual submissions. This finding echoes the Cambridge SupTech Lab’s 2023 global findings that more than half of authorities still handle data manually and nearly three-quarters validate it manually. Together, these findings underscore how poor-quality, manually handled data weakens supervisory confidence and delays risk detection.

Lack of standardization

MSC’s diagnostics across small island and low-income economies show that few regulators have consistent taxonomies or standardized data dictionaries across departments. Only 14% of regulators globally report full data standardization, and the share falls below 10% in smaller jurisdictions that depend on legacy reporting formats. Without common definitions, supervisors must clean and reconcile data after submission. This slows decision-making and makes cross-entity comparison and systemic risk analysis inefficient or even impossible. Any SupTech solution built on such data inherits these weaknesses.

Infrastructure constraints

Even as some authorities explore cloud-based SupTech pilots, in-house or private server storage still accounts for more than half of supervisory data systems in the Pacific, according to MSC’s research. This reliance on in-house systems can protect confidentiality, but it creates silos that block data sharing and integration with modern analytics tools.

The Cambridge SupTech Lab reports low global adoption of cloud storage (31.5%), while MSC’s Pacific survey reveals a significant and continued dependence on in-house and private servers across the region, which exceeds 50% in some individual countries. These regional figures may appear to diverge from global studies, but they are consistent when viewed through the lens of maturity: many jurisdictions remain at a transitional stage, where cloud experimentation has begun but full adoption is still limited.

Why a direct jump to SupTech is risky

The temptation to invest in the latest tools is understandable. However, MSC’s research, supported by findings from the Cambridge Centre for Alternative Finance (CCAF) and the OECD, shows that technology investments often underperform when they are built without solid data foundations. Systems fed by weak data yield unreliable results, exhaust scarce institutional resources, and increase operational and reputational risk. Without dependable data, even the most advanced SupTech initiatives can turn into costly experiments.

The evidence therefore suggests that, before regulators commit funds to advanced SupTech platforms or infrastructure, they should follow a gradual, capability-based strategy aligned with the maturity of their data ecosystems. A phased approach eases implementation, improves value realization, and helps avoid costly setbacks. Skipping this groundwork risks pouring resources into advanced technologies while core weaknesses in data systems remain, which would ultimately drain institutional capacity and weaken the stability of the financial system.

In our next blog, we will explore the building blocks of effective data systems, present MSC’s framework defining basic, intermediate, and advanced maturity levels, and show how central banks use these stages to implement scalable, future-ready SupTech solutions.

Written by

Ayushi Misra

Senior Manager

Debarshi Chakraborty

Senior Principal Architect

Kenneth Rudy Gomes

Analyst
