Can AI help with locally-led adaptation? The challenges.

by Graham Wright, Kunjbihari Daga and Debarshi Chakraborty

Feb 6, 2024 6 min

While digital advancements surge, many climate-vulnerable communities remain on the analog sidelines. Locally-led adaptation offers hope, empowering those most affected to craft solutions. But crafting these plans requires navigating complex data on policy, climate, agriculture, and more. Here, AI emerges as a potential tool to analyze this information, but only if it is trained on inclusive, accurate data. This is one of several issues this blog examines as we look toward a more sustainable future for all.

A world split by the digital divide

Despite the dramatic spread of digital technology, much of the global south continues to fall behind in its adoption and use. Shockingly, only 13% of smallholder farmers in Sub-Saharan Africa have registered for any digital service, and only 5% actively use them. In 2017, MSC documented the reasons why poor people fail to access digital technologies. For women, social factors magnify these barriers.

As a result, many of the communities most vulnerable to climate change are excluded and unable to participate in the digital revolution. This deprives them of opportunities to access critical information, financial services, key inputs, and collaboration. We need to enlist, train, and deploy a range of community-focused players to help vulnerable communities use the growing array of valuable digital tools to optimize their locally-led adaptation (LLA) planning, implementation, and governance. These players could include the staff of community-based organizations, financial service providers with reach into remote rural areas, agricultural extension workers, agriculture input dealers, and cash-in and cash-out (CICO) agents. Indeed, this is probably the only way we can scale up LLA to the levels required by climate change’s rapidly emerging and increasingly debilitating impacts.

Can AI help?

It is immensely appealing to think that AI can play a role in the development, implementation, and oversight of LLA strategies. However, the development of these strategies necessarily requires the identification, analysis, summarization, and communication of a diverse array of information, datasets, and complex ideas. Effective LLA strategies must consider policy and regulation, climate science, ecology, geography, agriculture, health, financial services, and gender, among other factors. AI could potentially play an important role in distilling the key elements and critical success factors from this daunting range of variables.

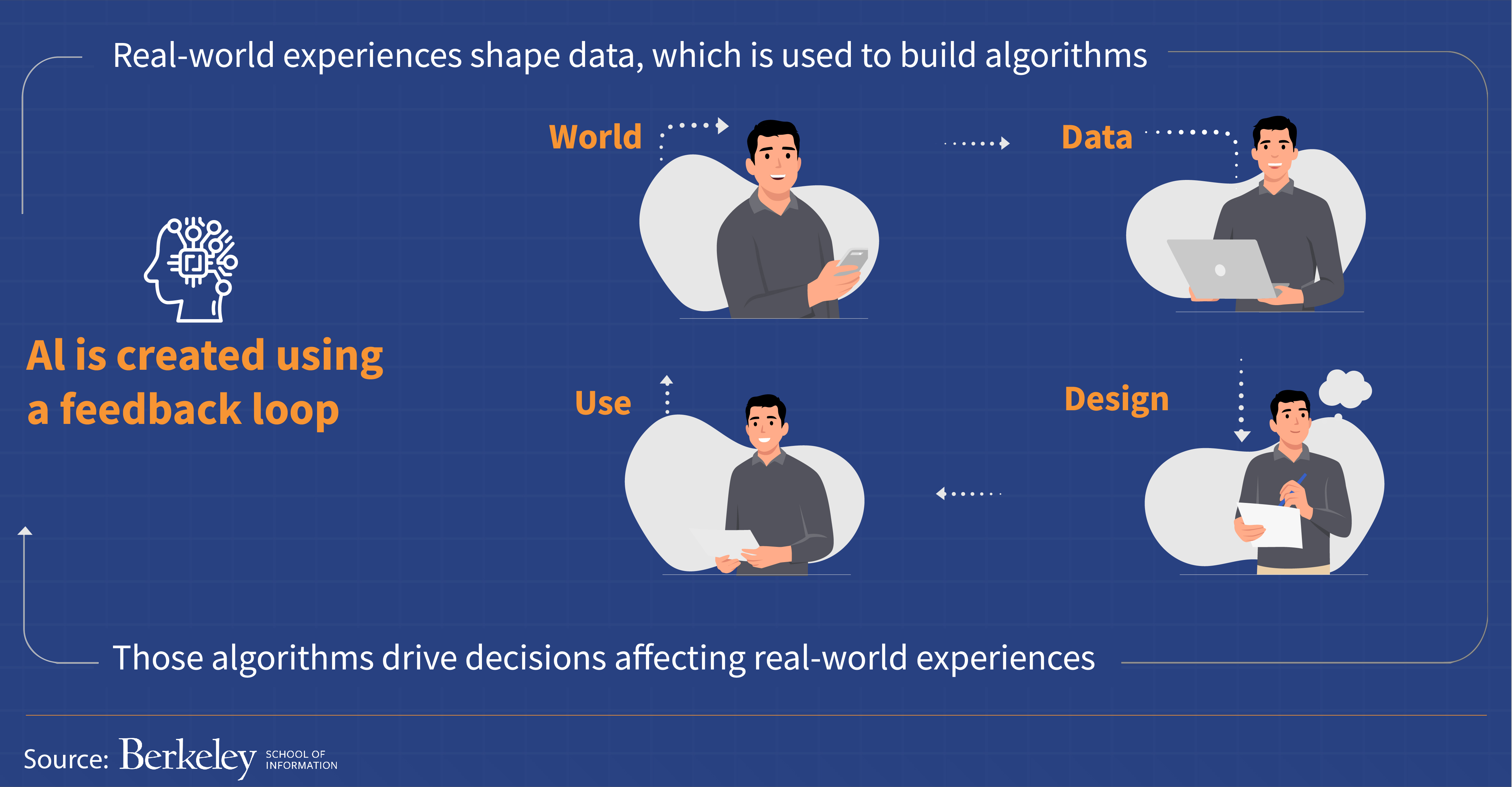

Yet the desire to apply AI to complex problems that have historically remained elusive or irrelevant to most modern technology or digital developments has often made the situation worse. The UC Berkeley School of Information has already shown how artificial intelligence bias affects women and people of color. Much of this bias arises from feedback loops built on the data that is most readily and abundantly available to train the algorithm.

The school notes, “AI is created using a feedback loop. Real-world experiences shape data, which is used to build algorithms. Those algorithms drive decisions affecting real-world experiences. This kind of circular reasoning means that bias can infiltrate the AI process in many ways.” These biases will be amplified further for people on the analog side of the digital divide.
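
The feedback loop the school describes can be made concrete with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real system: the function name `simulate_feedback_loop`, the `adoption_exponent` parameter, and the rule that people contribute new data in proportion to how well the tool already works for them are all hypothetical and chosen only for illustration.

```python
def simulate_feedback_loop(initial_share, rounds, adoption_exponent=2.0):
    """Track an under-represented group's share of training data over time.

    Illustrative assumptions (not taken from any real system):
    - Two equally sized groups exist in the real world.
    - The model serves each group about as well as that group's share
      of the training data.
    - People use the tool, and so generate new data, more than
      proportionally when it works well for them. This is modeled by
      raising quality to `adoption_exponent` (values above 1).
    - Each round, the model is retrained on the newly generated data.
    """
    share = initial_share
    history = [share]
    for _ in range(rounds):
        # Data contributed by each group in this round.
        minority_data = share ** adoption_exponent
        majority_data = (1.0 - share) ** adoption_exponent
        # The minority group's share of the next training set.
        share = minority_data / (minority_data + majority_data)
        history.append(share)
    return history


if __name__ == "__main__":
    # A group that starts with 20% of the data loses ground every round.
    for round_number, share in enumerate(simulate_feedback_loop(0.2, 5)):
        print(f"round {round_number}: minority share = {share:.6f}")
```

Under these assumptions, a group that starts with less than half of the data sees its share shrink in every round, while a group that starts with exactly half holds steady. Real systems are far messier, but the direction of the effect is the point.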

If we are to close the digital divide, we will need a highly cautious, context-specific strategy that considers the needs, interests, and capabilities of local participants. The data on which AI is trained is crucial, so if we want to deploy it to assist with LLA, and indeed many development challenges, we must:

- Avoid the imposition of external or top-down solutions and strike a balance between the use of digital technologies and respect for local expertise, culture, and values;

- Ensure local players, particularly those without the required infrastructure, expertise, or money, can access and use digital technology;

- Resolve the ethical and legal concerns about data ownership, permission, and use, and ensure the reliability, security, and privacy of digital data and systems.

So, what are the implications for digitally-enabled, locally-led adaptation?

Chatbots and natural language processing (NLP) present valuable possibilities to improve access to information for LLA strategies. However, another key limitation amplifies the challenges outlined by the UC Berkeley School of Information: The datasets used to train NLP systems often lack comprehensive coverage of local dialects, native languages, and regional cultural knowledge. Algorithms are built and trained on data from those already connected to the digital world, so they reinforce the exclusion of people stranded on the analog side of the digital divide and leave out the voices of those who are not connected. One sign of this exclusion is that 99% of the world’s online content is limited to only 40 languages.

Limitations in AI technology highlight the digital divide, as experienced by MSC in our recent projects. In India and Bangladesh, we used AI to analyze voice recordings. Despite being trained in the local languages, the NLP systems, developed with commonly available digital voice data, struggled with the dialects and accents of marginalized groups. Additionally, when we attempted to use AI to anticipate responses from rural women for survey follow-up questions, all AI systems failed, as they did not understand these women’s unique challenges.

The guidance offered by large language model AI systems is likely to be either too general or simply not applicable to the local context of many climate-affected communities. Furthermore, the feedback mechanism in the supervised learning process becomes less effective, as it is challenging to measure and correct the extent of inaccuracies or irrelevance in such generalized or inappropriate solutions. These challenges are mutually reinforcing and could lead to lower adoption rates and trust issues regarding the information provided by AI interfaces.

A good example of this arose in MSC’s work with an AI-driven agri-advisory app, which we have been testing with farmers in Bihar. There, we found the following issues:

- Compatibility of the application: We found a wide range of mobile phone models and Android versions, which vary depending on the farmers’ ability to afford them. Lower-specification handsets and older versions of Android limit the performance and functionality that the farmer can access through the app. This served as an important lesson for us for other digital projects, including the Digital Farmer Services (DFS) platform that MSC has been implementing in Bihar.

- Local dialect: The sensitivity of the voice detection functionality to local dialects is an issue. The app struggled to identify keywords, which led to instances where the farmer needed to provide multiple inputs.

- Maturity of the apps: In the current state of the app, the quality of the prompts decides the quality of the output. If the prompts are not written properly, the farmer gets basic and generic advice, which is not helpful. The app’s responses may not be relevant in some instances, such as when the farmer does not know of a new pest or disease or if its name is in a local dialect that the app cannot understand. Such examples highlight the LLMs’ limitations.

- Appropriate learning data: We wanted to conduct a similar experiment in Bangladesh. Yet, despite the app being already available in West Bengal, which shares a common language with Bangladesh, the cost to retrain the app for Bangladeshi agricultural policies, climatic conditions, value chains, and markets was surprisingly high.

Moreover, significant computing and storage resources are clearly needed to train these models, considering the large volume of data produced in local contexts across a region or geographic area. Additionally, these models may need to be enhanced with more neural nodes to preserve the accuracy of the results. Consequently, the cost of these resources is a major concern—particularly given the remote and “low-value” nature of many vulnerable communities.

Finally, the privacy and security of data significantly increase the challenges. Institutions and governments are still struggling to develop rules, laws, and frameworks for the responsible and ethical use of AI. Given this, communities or local government officials involved in LLA strategies are unlikely to trust a digital platform with their personally identifiable information, especially when they are uncertain about the accuracy of its results. Additionally, while people are still vulnerable to traditional phishing and malware attacks, the emergence of AI-generated deepfakes further complicates and intensifies these security issues.

Conclusion

AI could play an important role in supporting development initiatives in general and LLA in particular. However, as in all other cases, any AI-based solution or intervention is only as good as the relevance and authenticity of the data it is trained on. We will need to make very conscious efforts to include the voices of vulnerable communities, typically on the analog side of the digital divide, if we are to realize the potential of AI. Failure to do so will widen and deepen the divide. This is a challenge on which MSC is working. Stay tuned for updates!

Written by

Graham Wright

Chairperson

Kunjbihari Daga

Chief Architect
